by Steve Cunningham

Ever since the Compact Disc became the standard currency of the audio realm, there has been a need to make its 16-bit, 44.1kHz audio files smaller. Whether your goal is to stream your audio from a website, email a spec spot to a client across the country, or just squeeze another CD’s worth of classic rock on your station’s automation system, you’ll likely use some form of audio compression to do the job. This month we’ll look at some of these compression codecs and what they actually do to your sound.

Thanks in part to the original Napster and online music sharing, the mp3 file format is perhaps the most widely used audio compression format in the world. But the times are a-changing: Napster is busy reinventing itself as a paid music download service, and mp3.com has just been sold to CNET Networks, where it will become a music information-only website with no downloads. Meanwhile, new codecs are being developed that will significantly improve the quality of compressed audio and may unseat mp3 as the leading compression format.

CD QUALITY

The CD standard calls for analog audio to be sampled 44,100 times every second. The instantaneous analog voltage at each sample is converted to a digital number using 16 bits (binary digits) to describe the voltage. That’s why we refer to CD audio as 16-bit, 44.1kHz audio. Doing the math, we generate 1,411 kilobits of data for every second of stereo audio we record at CD quality (16 x 44,100 x 2 = 1,411,200 bits per second). That’s why CD audio files are so large, and why we use audio compression codecs (codec = enCOder/DECoder) to make them smaller.
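If you’d like to check that arithmetic yourself, here is a minimal Python sketch. The function name `pcm_bit_rate` is our own, chosen for illustration; it simply multiplies bits per sample by samples per second by the number of channels, then converts one minute of audio to bytes.

```python
def pcm_bit_rate(bits_per_sample: int, sample_rate_hz: int, channels: int) -> int:
    """Bits per second of uncompressed PCM audio."""
    return bits_per_sample * sample_rate_hz * channels


cd_bps = pcm_bit_rate(16, 44_100, 2)
print(cd_bps)  # → 1411200

# One minute of stereo CD audio, converted from bits to bytes (8 bits per byte).
bytes_per_minute = cd_bps * 60 // 8
print(bytes_per_minute)  # → 10584000, roughly 10.6 MB per minute
```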

Early compression codecs simply threw away samples (or bits within each sample) to reduce the effective file size, and they produced noticeable, unpleasant artifacts. The telephone company, for example, used techniques that sampled audio at 12 bits and 32kHz or less; standard digital telephone circuits use just 8 bits at 8kHz.
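To see why that approach sounded bad, here is a minimal sketch of naive data reduction in Python. The function `crude_reduce` and its parameters are hypothetical, for illustration only. It keeps one sample out of every few with no anti-alias filtering (so high frequencies fold back as aliasing) and zeroes the low-order bits of each 16-bit sample (which raises the noise floor).

```python
def crude_reduce(samples, keep_every=2, target_bits=12):
    """Naive 'compression' by throwing data away.

    samples: list of signed 16-bit integers (-32768..32767).
    keep_every: keep one sample out of every `keep_every`; there is no
        anti-alias filter, so high frequencies alias into the audio band.
    target_bits: zero the low-order bits so each sample needs only target_bits.
    """
    shift = 16 - target_bits
    decimated = samples[::keep_every]
    # Shifting right then left discards the low-order bits (adds quantizing noise).
    return [(s >> shift) << shift for s in decimated]


pcm = [1000, 2001, -3, 32767]
print(crude_reduce(pcm))  # → [992, -16]
```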

Most modern data compression techniques are far more sophisticated, and they take advantage of the fact that we don’t perceive everything that is recorded in the studio. After all, recording silence uses up as much disc space as does recording speech. So several companies developed codecs that eliminated data based on known audio phenomena. These codecs are collectively known as Perceptual Audio Coders, and they take advantage of effects including equal-loudness contours, masking, and redundancy.

EQUAL LOUDNESS CONTOURS

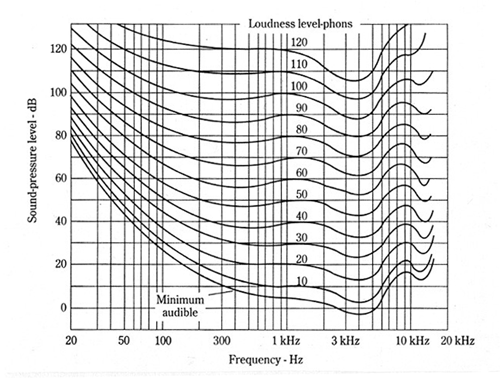

The human ear is not equally sensitive to all frequencies, particularly in the low and high frequency ranges. The ear’s response over the entire audio range was charted, originally by Fletcher and Munson in 1933 and later revised by others, as a set of curves showing the sound pressure levels of pure tones that are perceived as being equally loud. These curves are plotted for each 10 dB rise in level, with the reference tone at 1 kHz. They are also called loudness level contours or Fletcher-Munson curves (see figure 1).

The curves are lowest in the range from 1 to 5 kHz, with a dip around 4 kHz, indicating that the ear is most sensitive to frequencies in this range. So the intensity of higher or lower tones must be raised substantially to create the same impression of loudness. The lowest curve in the diagram represents the Threshold of Hearing, the highest the Threshold of Pain.

The normal range of the human voice is from about 500Hz to about 2kHz, with fricatives extending to over 5kHz. (Fricatives include the “s” sound in “sit” and the “sh” sound in “show”. I always wanted a de-fricative-izer instead of a de-esser.) The lower frequencies in human speech tend to be vowels, while the higher frequencies tend to be consonants. But the sounds that make speech intelligible exist mostly between 1kHz and 3kHz, which explains in part why the telephone companies limit phone audio to a band of roughly 300Hz to 3.4kHz.

It turns out that the A, B, and C weighting networks on sound level meters were derived as the inverse of the 40, 70, and 100 dB Fletcher-Munson curves. When you’re looking at specs for a new pair of monitors or a power amp and a noise or signal-to-noise figure is given with A, B, or C weighting, you’ll now know that the measurement has been filtered to account for that particular equal-loudness curve.
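For the curious, the A-weighting curve can be computed directly. This sketch assumes the standard analog A-weighting formula from IEC 61672, with its +2.00 dB offset that puts the curve at 0 dB at 1 kHz; the function name `a_weighting_db` is ours. Notice how steeply it discounts low frequencies, mirroring the way the 40 dB equal-loudness curve rises in the bass.

```python
import math


def a_weighting_db(f_hz: float) -> float:
    """A-weighting gain in dB at f_hz (analog A-weighting formula, IEC 61672)."""
    f2 = f_hz * f_hz
    num = (12194.0 ** 2) * f2 * f2
    den = ((f2 + 20.6 ** 2)
           * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
           * (f2 + 12194.0 ** 2))
    # The +2.00 dB offset normalizes the curve to about 0 dB at 1 kHz.
    return 20.0 * math.log10(num / den) + 2.00


for f in (50, 100, 1000, 4000, 10000):
    print(f, round(a_weighting_db(f), 1))
```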

MASKING

Our world regularly presents us with a multitude of sounds simultaneously. Usually we perceive each of these sounds separately, but only pay attention to the ones that are important to us. Unless there’s something we want to hear but cannot, we’re unaware of all the sounds that we don’t hear in the course of a day.

It is often difficult to hear one sound when a much louder sound is present. This process seems intuitive, but on the psychoacoustic and cognitive levels it becomes very complex. The term for this process is masking, and it’s one of the most-researched effects in audio.

Masking as defined by the American Standards Association (ASA) is the amount (or the process) by which the threshold of audibility for one sound is raised by the presence of another (masking) sound. For example, a loud car stereo can mask the car’s engine noise.

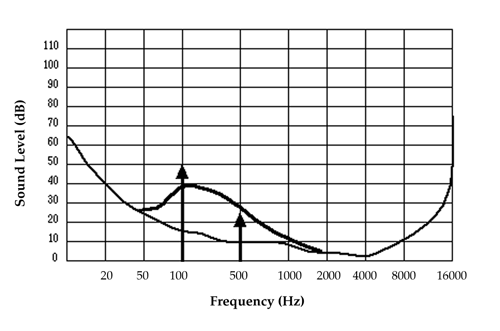

While we may think of the frequency spectrum from 20Hz to 20kHz as continuous, our brains don’t treat the audio spectrum as a continuum at all. Our brains perceive sound through roughly 24 to 32 distinct critical bands, each of which has a different bandwidth. For example, at 100Hz, the width of the critical band is about 160Hz, while at 10kHz it is about 2.5kHz in width. In figure 2, the softer sound is masked by the louder sound at a lower frequency because they both occupy the same critical band.

There’s no agreement regarding the exact number of critical bands in human hearing, and the bands seem to overlap. But the significance of critical bands is that a louder sound can effectively mask a softer one even though the louder sound is not the same frequency, so long as it is within the same critical band as the softer sound. And because the bandwidth of these critical bands increases with frequency, low frequency sounds generally mask higher frequency sounds more easily.
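One widely used way to put numbers on critical bands is the Bark scale. The sketch below uses the Zwicker and Terhardt approximations for critical-band rate and critical bandwidth; published figures vary, and this formula gives somewhat narrower low-frequency bands than some other sources. The rule that two tones share a band when they are less than one Bark apart is our own simplification, as are all the function names.

```python
import math


def bark(f_hz: float) -> float:
    """Critical-band rate in Bark (Zwicker & Terhardt approximation)."""
    return 13.0 * math.atan(0.00076 * f_hz) + 3.5 * math.atan((f_hz / 7500.0) ** 2)


def critical_bandwidth_hz(f_hz: float) -> float:
    """Approximate critical bandwidth in Hz centered at f_hz (Zwicker & Terhardt)."""
    f_khz = f_hz / 1000.0
    return 25.0 + 75.0 * (1.0 + 1.4 * f_khz ** 2) ** 0.69


def same_critical_band(f1_hz: float, f2_hz: float) -> bool:
    """Simplified test: tones less than 1 Bark apart share a critical band."""
    return abs(bark(f1_hz) - bark(f2_hz)) < 1.0


print(round(bark(20_000), 1))                # Bark span of the audible range
print(round(critical_bandwidth_hz(10_000)))  # band width near 10 kHz
print(same_critical_band(1000, 1080))        # tones 80 Hz apart
print(same_critical_band(1000, 2000))        # tones an octave apart
```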

In addition to masking in the frequency domain, our auditory system also has a masking effect in the time domain. A loud sound affects our perception of softer sounds not only after it, but before it as well. A softer sound that occurs within 15 milliseconds before a loud one will be masked by the louder sound. This is called backward masking. And softer sounds that occur as much as 200 milliseconds after a loud sound will also be masked. This effect is known as forward masking, and it is due to the fact that the basilar membrane in the cochlea takes about 200 milliseconds to recover from a loud sound and be ready to respond to a softer one.
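The time windows above can be expressed as a simple rule. This is a minimal sketch that treats masking as all-or-nothing inside a 15 ms backward window and a 200 ms forward window; in reality the masking effect fades gradually across those windows. The required level difference `min_diff_db` is a hypothetical parameter, not a published figure.

```python
BACKWARD_MS = 15.0   # a softer sound up to 15 ms BEFORE a loud one can be masked
FORWARD_MS = 200.0   # a softer sound up to 200 ms AFTER a loud one can be masked


def temporally_masked(probe_ms, probe_db, masker_ms, masker_db, min_diff_db=20.0):
    """True if the softer probe falls inside the louder masker's time window.

    min_diff_db is a hypothetical threshold: the masker must be at least
    this much louder than the probe for masking to apply.
    """
    if masker_db - probe_db < min_diff_db:
        return False
    dt = probe_ms - masker_ms  # negative means the probe comes before the masker
    return -BACKWARD_MS <= dt <= FORWARD_MS


print(temporally_masked(990, 40, 1000, 80))   # 10 ms before: backward masking
print(temporally_masked(1150, 40, 1000, 80))  # 150 ms after: forward masking
print(temporally_masked(1250, 40, 1000, 80))  # 250 ms after: outside the window
```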

REDUNDANCY

This is the simplest effect to understand: we’re all aware that stereo signals often contain much of the same audio in both channels. By analyzing a stereo audio file and encoding that shared content only once, the final file size can be significantly reduced. The MPEG-1 Layer 3 spec refers to the mode where this is done as Joint Stereo.
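One of the joint-stereo tools in MPEG-1 Layer 3 is mid/side coding: instead of storing left and right, the encoder stores their sum (mid) and difference (side). When the channels are nearly identical, the side channel is close to zero and needs very few bits. This sketch uses a simple divide-by-two scaling for clarity; the spec’s own scaling differs, and the function names are ours.

```python
def ms_encode(left, right):
    """Convert left/right samples to mid (average) and side (half difference)."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    return mid, side


def ms_decode(mid, side):
    """Recover left/right exactly: L = M + S, R = M - S."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


left = [0.5, 0.25, -0.5]
right = [0.5, 0.25, -0.25]   # nearly the same as the left channel
mid, side = ms_encode(left, right)
print(side)  # → [0.0, 0.0, -0.125]  (mostly zeros: cheap to encode)
```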

PERCEPTUAL CODERS

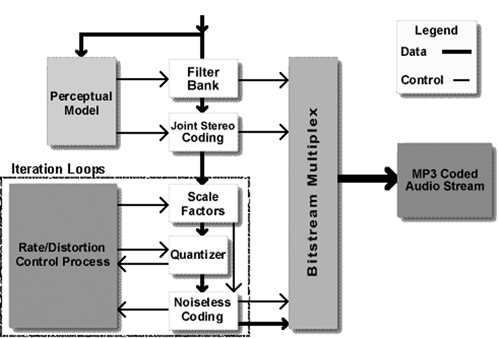

As mentioned, perceptual audio coders take advantage of the way human hearing works. The mp3 encoding process is the simplest to describe here (see figure 3), especially since the exact processes used by Real and Microsoft are proprietary. But all perceptual coders work in the same basic fashion.

Using DSP, the audio is divided into 32 bands. This process uses convolution filters and is known as sub-band filtering. The objective is to approximate the critical bands of human hearing, although mp3’s 32 sub-bands are equally wide while the ear’s critical bands widen with frequency. Once the audio is divided, a perceptual model (called the psychoacoustic model) built into the algorithm analyzes the contents of each band to determine which of its sounds are likely to be masked by the strongest sounds in the same band. The sounds that will be masked are then discarded.
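Here is a toy version of that discard step. It assumes the filter bank has already produced a list of component amplitudes for each band, and it treats anything more than a fixed number of decibels below the band’s strongest component as masked. Real psychoacoustic models compute a masking threshold with spreading across neighboring bands; the fixed `mask_offset_db` here is a hypothetical stand-in, and `discard_masked` is our own name.

```python
def discard_masked(bands, mask_offset_db=20.0):
    """Toy psychoacoustic pass over sub-band data.

    bands: list of bands, each a list of linear component amplitudes.
    Components more than mask_offset_db below the strongest component
    in the same band are treated as masked and set to zero.
    """
    out = []
    for band in bands:
        if not band:
            out.append([])
            continue
        peak = max(band)
        threshold = peak * 10 ** (-mask_offset_db / 20)  # dB offset -> amplitude
        out.append([a if a >= threshold else 0.0 for a in band])
    return out


bands = [[1.0, 0.05, 0.2], [0.01, 0.0005]]
print(discard_masked(bands))  # → [[1.0, 0.0, 0.2], [0.01, 0.0]]
```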

Additionally, the remaining sound in each band is requantized (a fancy word for “described again using fewer bits per sample”) so that any quantizing error ends up just below the masking threshold.
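How many bits does a band actually need? A common rule of thumb is that each bit of resolution lowers quantizing noise by about 6.02 dB. So if we know how far a band’s signal sits above its masking threshold (the signal-to-mask ratio), we can estimate the fewest bits that keep the noise under the mask. This is a sketch under that rule of thumb; real encoders allocate bits iteratively against a bit budget, and `bits_for_band` is our own name.

```python
import math

DB_PER_BIT = 6.02  # each extra bit lowers quantizing noise by roughly 6.02 dB


def bits_for_band(signal_db: float, mask_threshold_db: float, max_bits: int = 16) -> int:
    """Fewest bits that keep quantizing noise below the masking threshold."""
    smr = signal_db - mask_threshold_db  # signal-to-mask ratio in dB
    if smr <= 0:
        return 0  # the whole band is masked, so nothing needs to be sent
    return min(max_bits, math.ceil(smr / DB_PER_BIT))


print(bits_for_band(60, 30))   # → 5  (30 dB above the mask)
print(bits_for_band(40, 45))   # → 0  (already below the mask)
```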

Further data reduction is achieved by temporal masking. The sampled audio is broken up into blocks, typically 10 milliseconds in length, and the blocks are then analyzed for temporal maskers (louder sounds that will mask sounds before or after them). Taking advantage of both forward and backward masking, some of the audio data can be discarded because of the presence of temporal maskers. Finally, the finished audio stream is formatted for storage on disk.
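The blocking step is easy to sketch. At 44.1kHz, a 10 millisecond block holds 441 samples. The second function below flags blocks whose peak level jumps well above the previous block, a crude stand-in for finding temporal maskers. The 20 dB `jump_db` threshold and both function names are hypothetical, chosen for illustration.

```python
import math


def split_blocks(samples, sample_rate_hz=44_100, block_ms=10):
    """Split samples into consecutive blocks of block_ms milliseconds."""
    n = int(sample_rate_hz * block_ms / 1000)  # 441 samples at 44.1kHz
    return [samples[i:i + n] for i in range(0, len(samples), n)]


def find_temporal_maskers(blocks, jump_db=20.0):
    """Indices of blocks whose peak is at least jump_db above the previous block."""
    def peak_db(block):
        peak = max((abs(s) for s in block), default=0.0)
        return 20 * math.log10(peak) if peak > 0 else float("-inf")

    levels = [peak_db(b) for b in blocks]
    return [i for i in range(1, len(levels)) if levels[i] - levels[i - 1] >= jump_db]


# 20 ms of quiet, 10 ms of loud, 10 ms of quiet (full scale = 1.0).
audio = [0.001] * 882 + [0.5] * 441 + [0.001] * 441
blocks = split_blocks(audio)
print(len(blocks), find_temporal_maskers(blocks))  # → 4 [2]
```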

MPEG layers 1, 2 and 3 all use perceptual audio encoding, as do Dolby’s AC-3 (also known as Dolby Digital and used in the movies) and Sony’s ATRAC (used in the MiniDisc). Another application of perceptual audio coding is iBiquity’s PAC (Perceptual Audio Coder, which was developed by Lucent), used in its IBOC DAB scheme. PAC is also used by XM and Sirius for their satellite-delivered audio services.

The finished quality of encoded audio is, of course, dependent on the bit rate at which it was encoded. Most mp3 encoders will let you encode audio at bit rates from 64kbps up to 320kbps. Remember, CD audio is about 1,411kbps, so the compression is quite effective. However, bit rates below 128kbps can generate quite audible artifacts, including but not limited to high-frequency whistling, low-frequency distortion, and a general loss of frequency response. The higher the bit rate, the better the sound.
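To put those bit rates in perspective, this short sketch compares each one with the 1,411.2kbps of stereo CD audio and shows how much disk space a minute of audio takes (in millions of bytes). The function names are our own.

```python
CD_KBPS = 16 * 44_100 * 2 / 1000  # 1411.2 kbps for stereo CD audio


def compression_ratio(encoded_kbps: float) -> float:
    """How many times smaller than CD audio the encoded stream is."""
    return CD_KBPS / encoded_kbps


def mb_per_minute(kbps: float) -> float:
    """Millions of bytes used by one minute of audio at the given bit rate."""
    return kbps * 1000 * 60 / 8 / 1e6


for rate in (64, 128, 192, 320):
    print(f"{rate:>3} kbps  ratio {compression_ratio(rate):4.1f}:1  "
          f"{mb_per_minute(rate):.2f} MB/min")
```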

In my opinion, 128kbps is fine for sending a spot to a client for approval. But for delivery of finished product, I normally encode at 192kbps or better. The difference in quality is noticeable.

MPEG-4 AND AAC

Perhaps the most exciting recent development in perceptual coders is AAC (Advanced Audio Coding), which is part of the MPEG-4 standard. This is the codec Apple uses to encode downloadable music for its iTunes Store. AAC was developed jointly within the MPEG group by companies including Dolby, Fraunhofer (the original mp3 guys), AT&T, Sony, and Nokia. It’s also being used by Digital Radio Mondiale (DRM), which hopes to bring high-fidelity (“near-FM”) digital audio to the AM bands in Europe.

So far, AAC looks like a winner. I’ve participated in several listening tests over the past months, and to my ears AAC audio that was encoded at 128kbps sounds as good as mp3 audio at 192kbps, if not better. The iTunes program that plays AAC files is now available for Windows as well as the Mac, but until it and other AAC players become widely available we’ll probably have to stick with mp3 for most work. Still, it will be interesting to see where this goes.

TIPS FOR ENCODING AUDIO

It’s great to be able to encode audio, with any codec including Real and Windows Media, at the highest possible rate. But that’s not always practical, especially if you’re streaming the audio over the Internet to listeners who may be on dial-up connections. In that case, you just have to do the very best you can. However, there are some things you can do in production to make your audio more “encoder friendly,” particularly now that you know how encoders work.

Avoid Heavy Compression. This is one of the toughest tips to follow, especially if your client is a car dealer (need I say more?). But high compression ratios, especially when combined with short attack and release times, can generate pumping and breathing artifacts that don’t give the codec accurate information about what to throw away. If the background noise level is coming up louder between sentences, the codec might decide to throw out some of the VO around the noise instead, and will almost certainly generate artifacts.
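Here’s why heavy compression brings the noise up between sentences. This sketch models only a compressor’s static gain curve (hard knee, no attack or release), and the settings are hypothetical: a -30dBFS threshold, an 8:1 ratio, speech peaks at -6dBFS, and room noise at -60dBFS. Once make-up gain restores the peaks, the noise floor comes up with it.

```python
def compress_db(level_db, threshold_db=-30.0, ratio=8.0, makeup_db=0.0):
    """Static downward compressor curve (hard knee); levels in dBFS."""
    if level_db > threshold_db:
        out = threshold_db + (level_db - threshold_db) / ratio
    else:
        out = level_db  # below threshold the level is left alone
    return out + makeup_db


speech_peak, room_noise = -6.0, -60.0
# Make-up gain that brings the compressed speech peak back to -6 dBFS.
makeup = speech_peak - compress_db(speech_peak)   # 21 dB with these settings
peak_out = compress_db(speech_peak, makeup_db=makeup)
noise_out = compress_db(room_noise, makeup_db=makeup)
print("speech-to-noise before:", speech_peak - room_noise)  # → 54.0
print("speech-to-noise after: ", peak_out - noise_out)      # → 33.0
```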

Band-limit Your Sound. Since you know that there’s not much going on in speech below 200Hz, why not reduce the bass yourself instead of letting the encoder do it for you? The same goes for high-frequency material, some of which may get tossed if it’s in the same critical band as the VO. I’d rather make my own compromises in EQ beforehand than leave the decision to the computer algorithm. This is particularly important if your audio will ultimately be streamed.
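If you’d rather see band-limiting in code than on an EQ panel, here is a minimal sketch: a first-order high-pass filter followed by a first-order low-pass filter, each rolling off at 6dB per octave. The 200Hz low cut follows the text; the 8kHz high cut is a hypothetical choice, and a real console or plug-in EQ would usually offer much steeper slopes.

```python
import math


def band_limit(samples, sample_rate_hz=44_100, low_cut_hz=200.0, high_cut_hz=8000.0):
    """First-order high-pass then first-order low-pass (6 dB/octave each)."""
    dt = 1.0 / sample_rate_hz

    # High-pass (RC) stage: removes rumble and bass below low_cut_hz.
    rc_hp = 1.0 / (2 * math.pi * low_cut_hz)
    a = rc_hp / (rc_hp + dt)
    hp = []
    prev_x = prev_y = 0.0
    for x in samples:
        y = a * (prev_y + x - prev_x)
        hp.append(y)
        prev_x, prev_y = x, y

    # Low-pass (RC) stage: rolls off content above high_cut_hz.
    rc_lp = 1.0 / (2 * math.pi * high_cut_hz)
    b = dt / (rc_lp + dt)
    out = []
    y = 0.0
    for x in hp:
        y += b * (x - y)
        out.append(y)
    return out
```

A 1kHz tone passes almost untouched, while a steady DC offset decays away to nothing.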

Mind Your Levels. Even short bursts of distorted audio, ones that aren’t audible or objectionable to you, will drive a codec nuts. Distortion generates high-frequency content, and again you risk having good sound eliminated during the brief periods of distortion. Remember, your sound will be broken into little 10 millisecond blocks and analyzed for temporal masking. That’s when the distortion can throw the codec off.
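A quick way to catch clipping you might not hear is to scan the samples for runs at full scale. This sketch reports every run of consecutive full-scale 16-bit samples; treating three or more in a row as clipping is a common heuristic, but the `min_run` default and the function name are our own assumptions.

```python
def find_clipped_runs(samples, full_scale=32767, min_run=3):
    """Return (start_index, length) for runs of >= min_run full-scale samples."""
    runs = []
    start = None
    for i, s in enumerate(samples):
        if abs(s) >= full_scale:
            if start is None:
                start = i  # a run of full-scale samples begins here
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    # Handle a run that lasts until the final sample.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs


pcm = [0, 32767, 32767, 32767, 100, -32768, -32768]
print(find_clipped_runs(pcm))             # → [(1, 3)]
print(find_clipped_runs(pcm, min_run=2))  # → [(1, 3), (5, 2)]
```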

Balance. Keep the important sounds hot enough in the mix to ensure that they won’t be eliminated during encoding. Keep your overall level high enough without getting close to distortion and you’ll be fine.

Happy squashing!

♦